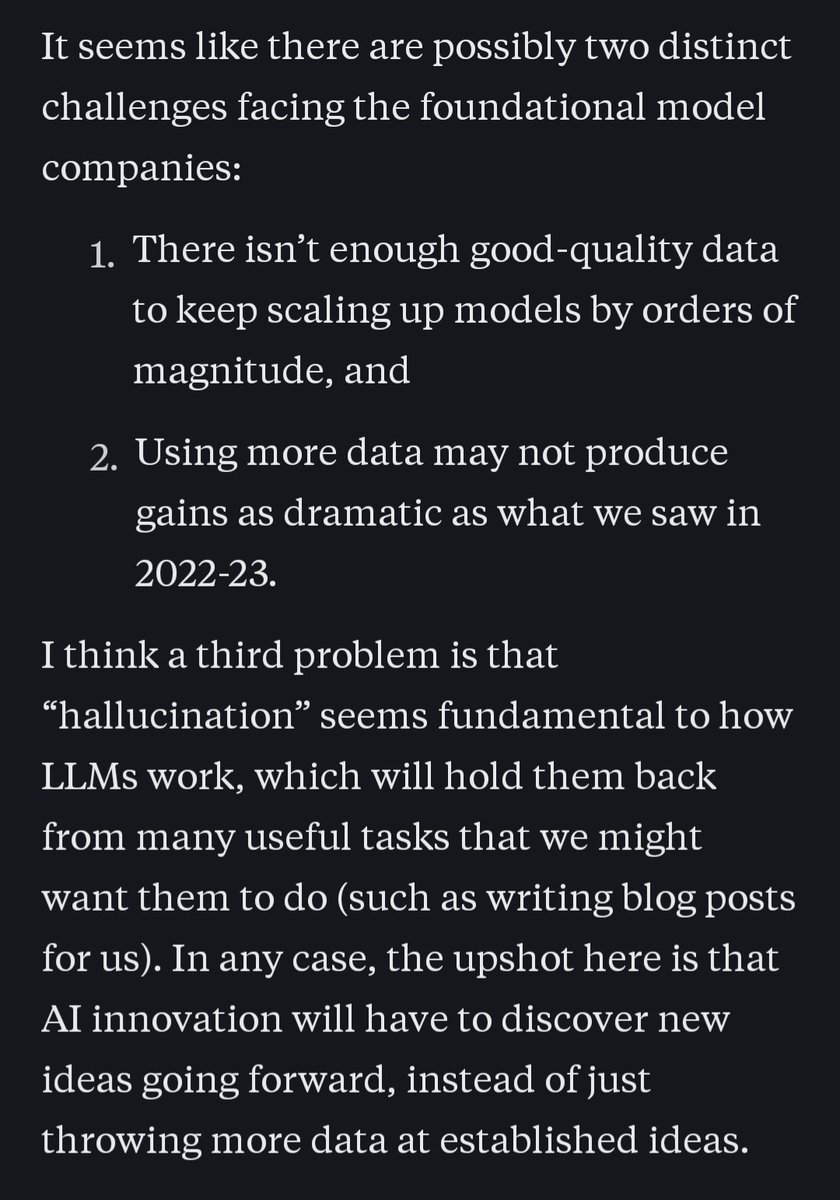

This from Noah Smith is representative of a common misunderstanding about AI right now. There are distinct challenges, but they’re not about data. We can get data. The world is made of data and we have put sensors all over it. And we can synthesize data through self-play.

The problem is not hallucination either (although that’s a convenient thing for a blogger to believe 😉). The problem is getting enough compute and electricity to run giant training runs that have a non-zero chance of failing for mysterious reasons. There are only so many investors, and only so many billions of dollars available for speculation.

Oh, and there’s only a limited supply of the magic crystals we call GPUs, and everybody’s in a perpetual bidding war over them. And we might start an actual war that threatens the only place where they make them.

Then once you do have enough magic crystals and enough power to run them consistently for weeks or months, and if your ritual doesn’t fail, you get this demon that’s smarter than almost anybody on Earth. How do you prove that? And how do you control such a being?

Because this thing is super smart. Or even if it’s only as smart as a grad student, how do you convince a grad student to only summarize emails and answer random questions and never tell the customer to unalive themselves? How do you prove that it will be a good little employee?

It’s expensive to run the models too, once they are trained. The demon needs to be put to work. Maybe “unalive yourself” is fine for internal use, but how do you use it to make money? Maybe more importantly, how do you use it to convince investors to give you another order of magnitude of cash?